Zero-Shot Terrain Context Identification and Friction Estimation

Combining foundation models with clustering for zero-shot terrain identification.

Autonomous Vehicles Must Adapt On the Fly

- Traversing unknown or varying terrain is a fundamental obstacle for robust autonomous vehicle operation.

- Our approach eliminates the need for pre-mapped terrain information, so the vehicle can adapt to environments it has never seen.

Learning Terrain Types Without Supervision


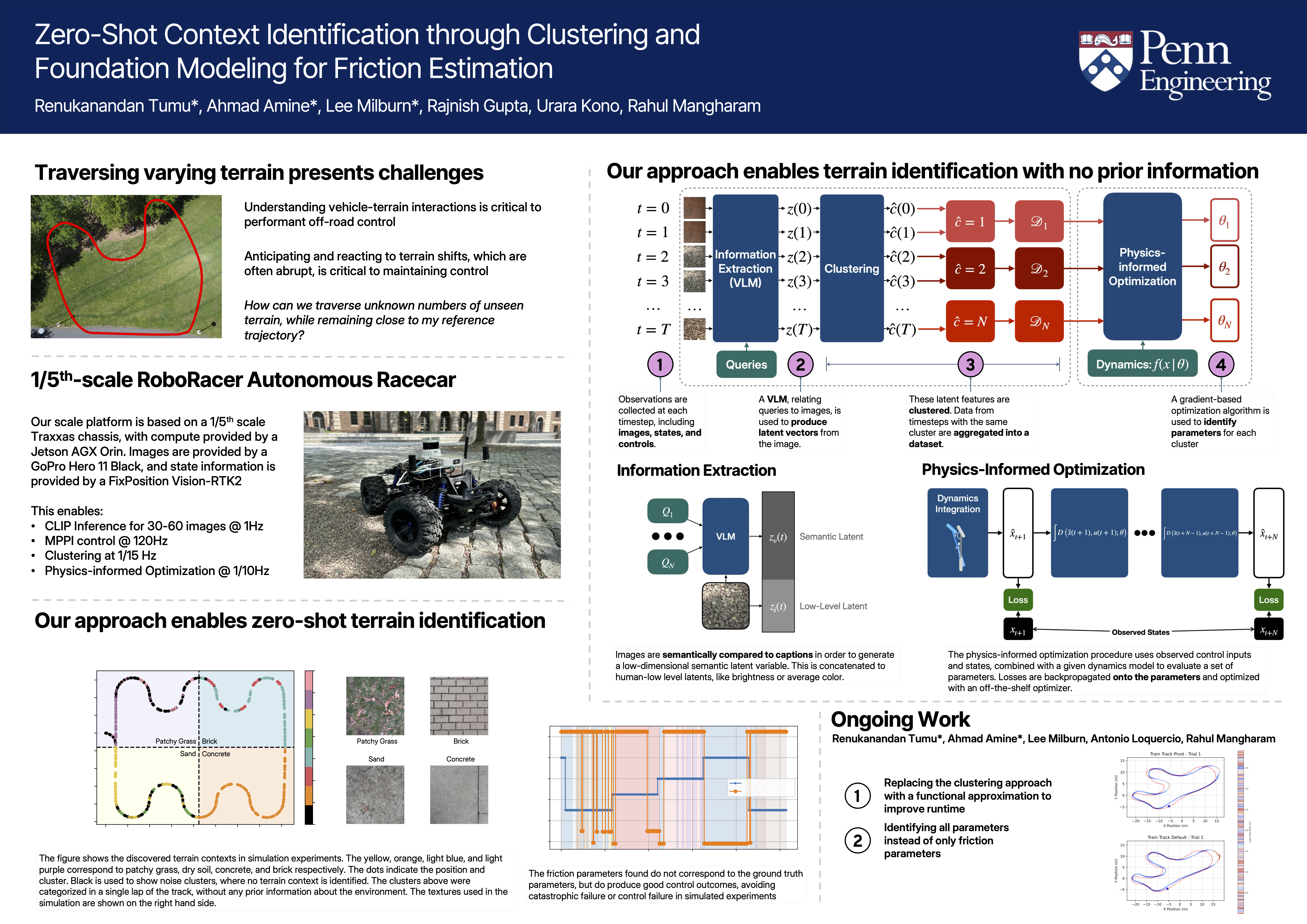

Continuous Multi-Modal Sensing

The vehicle records images, state, and control data at each timestep, building a rich dataset during real operation.
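One way to picture this dataset is as a rolling log of per-timestep records. The sketch below is hypothetical: the `Sample` fields, the `SampleBuffer` class, and the fixed-size rolling window are illustrative assumptions, not the system's actual data format.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Tuple


@dataclass
class Sample:
    """One timestep of multi-modal data (hypothetical field names)."""
    t: float                      # timestamp in seconds
    image_id: str                 # reference to the camera frame, e.g. a file name
    state: Tuple[float, ...]      # e.g. (x, y, yaw, vx, vy, yaw_rate)
    control: Tuple[float, float]  # e.g. (steering, throttle)


class SampleBuffer:
    """Fixed-size rolling log; the oldest samples are dropped first."""

    def __init__(self, maxlen: int) -> None:
        self._buf: Deque[Sample] = deque(maxlen=maxlen)

    def record(self, sample: Sample) -> None:
        self._buf.append(sample)

    def window(self, t_start: float, t_end: float) -> List[Sample]:
        """Return samples with t_start <= t < t_end, e.g. for one clustering round."""
        return [s for s in self._buf if t_start <= s.t < t_end]

    def __len__(self) -> int:
        return len(self._buf)
```

A bounded `deque` keeps memory constant during long runs, at the cost of forgetting old data; whether the real system keeps or discards old samples is not stated here.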

Extracting Meaningful Semantic Features

A Vision-Language Model (e.g., CLIP) translates images into semantic latent vectors by matching them to text-based queries. These are enhanced with basic visual features, such as brightness and color, for additional context.
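A minimal sketch of how such a feature vector could be assembled, assuming the image and text-query embeddings have already been computed by a CLIP-style encoder (the encoder call itself is omitted). The softmax over scaled cosine similarities mirrors CLIP-style zero-shot scoring; the `scale=100.0` value, the plain channel-mean brightness, and all function names are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def semantic_scores(image_emb: Sequence[float],
                    text_embs: Sequence[Sequence[float]],
                    scale: float = 100.0) -> List[float]:
    """Zero-shot scores: softmax over scaled similarities to each text query."""
    return softmax([scale * cosine(image_emb, t) for t in text_embs])


def color_stats(pixels: Sequence[Tuple[int, int, int]]) -> List[float]:
    """Mean R, G, B and a simple mean brightness, all scaled to [0, 1]."""
    n = len(pixels)
    r = sum(p[0] for p in pixels) / (255.0 * n)
    g = sum(p[1] for p in pixels) / (255.0 * n)
    b = sum(p[2] for p in pixels) / (255.0 * n)
    return [r, g, b, (r + g + b) / 3.0]


def terrain_feature(image_emb: Sequence[float],
                    text_embs: Sequence[Sequence[float]],
                    pixels: Sequence[Tuple[int, int, int]]) -> List[float]:
    """Semantic scores followed by basic color statistics."""
    return semantic_scores(image_emb, text_embs) + color_stats(pixels)
```

Here each row of `text_embs` would come from a hypothetical prompt such as "a photo of grass" or "a photo of concrete"; the actual query set is not given in the text.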

Unsupervised Clustering for Terrain Discovery

Latent features are automatically grouped into clusters, each representing a unique, discovered terrain type, without manual labeling or prior knowledge.
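The text does not name the clustering algorithm, so the sketch below uses k-means (Lloyd's algorithm) as a stand-in, with a deterministic farthest-point initialisation to keep results reproducible. Fixing the number of clusters `k` in advance is an assumption of this sketch.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(point: Sequence[float], centroids: Sequence[Vector]) -> int:
    return min(range(len(centroids)), key=lambda j: sq_dist(point, centroids[j]))


def kmeans(points: Sequence[Sequence[float]], k: int,
           max_iter: int = 100) -> Tuple[List[Vector], List[int]]:
    """Lloyd's k-means with deterministic farthest-point initialisation."""
    centroids: List[Vector] = [list(points[0])]
    while len(centroids) < k:
        # Add the point farthest from its closest existing centroid.
        far = max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        centroids.append(list(far))

    labels = [-1] * len(points)
    for _ in range(max_iter):
        new_labels = [nearest(p, centroids) for p in points]
        if new_labels == labels:  # assignments stable: converged
            break
        labels = new_labels
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old centroid if a cluster empties
                dim = len(members[0])
                centroids[j] = [sum(m[d] for m in members) / len(members)
                                for d in range(dim)]
    return centroids, labels
```

Each returned label plays the role of a discovered terrain type; the labels are arbitrary integers with no attached meaning such as "grass" or "brick".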

Physics-Informed Optimization for Actionable Parameters

For each terrain cluster, a gradient-based optimizer fine-tunes friction and related parameters, using a differentiable vehicle dynamics model to backpropagate losses from observed driving behavior.
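To illustrate the idea on the smallest possible case, the sketch below fits one friction coefficient per cluster by gradient descent on a one-step prediction error. It deliberately swaps in a toy longitudinal model in which friction acts as a constant deceleration `mu * g`; this is not the paper's vehicle dynamics model. The gradient is derived by hand here, whereas an autodiff framework would normally supply it. The learning rate, step count, and function names are assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

G = 9.81  # gravitational acceleration [m/s^2]

# (cluster label, speed v_t, commanded acceleration u_t, observed next speed)
Transition = Tuple[int, float, float, float]


def predict(v: float, u: float, mu: float, dt: float) -> float:
    """Toy model (assumption): friction is a constant deceleration mu * g."""
    return v + dt * (u - mu * G)


def fit_mu(data: Sequence[Tuple[float, float, float]], dt: float,
           mu0: float = 0.5, lr: float = 0.5, steps: int = 200) -> float:
    """Gradient descent on the mean squared one-step prediction error."""
    mu = mu0
    n = len(data)
    for _ in range(steps):
        # d/dmu of (pred - obs)^2 is 2 * (pred - obs) * (-dt * G).
        grad = sum(2.0 * (predict(v, u, mu, dt) - v_next) * (-dt * G)
                   for v, u, v_next in data) / n
        mu = max(0.0, mu - lr * grad)  # keep the coefficient non-negative
    return mu


def fit_per_cluster(transitions: Sequence[Transition], dt: float) -> Dict[int, float]:
    """Group transitions by terrain cluster and fit one friction value each."""
    groups: Dict[int, List[Tuple[float, float, float]]] = defaultdict(list)
    for label, v, u, v_next in transitions:
        groups[label].append((v, u, v_next))
    return {label: fit_mu(data, dt) for label, data in groups.items()}
```

The step size interacts with `dt` and `G`: the loss curvature here is `2 * dt**2 * G**2`, so `lr = 0.5` is stable for `dt = 0.1` but would need retuning for other time steps.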

Our Approach Can Be Deployed on Real Hardware

- Vehicle Platform: 1/5th-scale RoboRacer (Traxxas chassis)

- Computation: Jetson AGX Orin (onboard GPU)

- Vision: GoPro Hero 11 Black (240 Hz video)

- Localization: FixPosition Vision-RTK2 (precise state estimation)

Real-Time Operation Is Practical

- Semantic inference (CLIP): 30–60 images/s, processed in batches at 1 Hz

- Control (MPPI): 120 Hz

- Clustering: ~0.07 Hz (1 per 15 seconds)

- Physics-informed optimization: 0.1 Hz (1 per 10 seconds)
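The rates above span three orders of magnitude. The sketch below only illustrates their ratios by expressing each slower component as a whole number of 120 Hz control ticks. It is a single-threaded simplification; on real hardware these components would more plausibly run concurrently, and the task names are hypothetical.

```python
from typing import Dict

CONTROL_HZ = 120  # MPPI control rate

# Periods in seconds for the slower components.
PERIODS_S: Dict[str, float] = {
    "clip_inference": 1.0,         # 1 Hz
    "clustering": 15.0,            # ~0.07 Hz
    "physics_optimization": 10.0,  # 0.1 Hz
}

# Each period expressed as a whole number of control ticks.
EVERY_N_TICKS: Dict[str, int] = {
    name: round(p * CONTROL_HZ) for name, p in PERIODS_S.items()
}


def count_runs(duration_s: float) -> Dict[str, int]:
    """Count how often each component fires in a single-threaded 120 Hz loop."""
    counts = {"mppi_control": 0, **{name: 0 for name in EVERY_N_TICKS}}
    for tick in range(1, round(duration_s * CONTROL_HZ) + 1):
        counts["mppi_control"] += 1  # control runs on every tick
        for name, n in EVERY_N_TICKS.items():
            if tick % n == 0:  # slower tasks fire on multiples of their period
                counts[name] += 1
    return counts
```

Over a 30 s window this gives 3600 control updates, 30 CLIP batches, 3 optimization rounds, and 2 clustering rounds.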

Effective Control Even with Imperfect Estimation

- The system autonomously distinguishes between multiple terrain types (e.g., patchy grass, dry soil, concrete, brick) in a single lap, with zero prior knowledge of the environment.

- Estimated friction parameters, while not always numerically identical to ground truth, enable effective and stable control, preventing failures in simulation.

- The clustering-based approach allows the controller to adapt quickly to abrupt terrain changes.
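A plausible reason adaptation can be fast: once clusters and their friction estimates exist, handling a new frame only requires a nearest-centroid lookup, with no re-optimization. The sketch assumes nearest-centroid assignment and a per-cluster friction table; `friction_for_frame` and the `default_mu` fallback for clusters that have not been fitted yet are hypothetical.

```python
from typing import Dict, Sequence


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def friction_for_frame(feature: Sequence[float],
                       centroids: Sequence[Sequence[float]],
                       mu_by_cluster: Dict[int, float],
                       default_mu: float = 0.5) -> float:
    """Assign the frame to its nearest terrain cluster and return that cluster's friction."""
    label = min(range(len(centroids)), key=lambda j: sq_dist(feature, centroids[j]))
    return mu_by_cluster.get(label, default_mu)  # fallback if not fitted yet
```

Because the lookup is stateless, the friction value can switch on the very first frame after a terrain boundary. The value would then be handed to the controller's dynamics model.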

Next Steps: Toward Adaptive Off-Road Autonomy

- Accelerated Context Recognition: Replace clustering with a learned function approximator for near-instant terrain identification.

- Comprehensive System Identification: Extend to estimate all model parameters, not just friction.

- Generalization: Handle an unknown number of new terrain types and maintain robust control through abrupt changes.

Takeaway:

Our method demonstrates that vision-language foundation models, combined with physics-informed optimization, empower autonomous vehicles to adapt to unseen terrain in real time, without human supervision or advance mapping, unlocking new possibilities for robust off-road autonomy.

Paper Link
