Conformal Prediction for Robotics

Developing conformal prediction methods for uncertainty quantification in robotic systems

Overview

This research direction explores the application of conformal prediction techniques in robotics. Conformal prediction wraps a predictive model and returns prediction sets that contain the true outcome with a user-specified probability (for example, 90%), assuming only that calibration and test data are exchangeable. Such distribution-free guarantees are valuable for robotic systems operating in dynamic and uncertain environments. While these techniques are widely studied in machine learning, their application to robotics is still emerging. This work aims to bridge that gap by developing conformal prediction methods tailored to robotic applications such as perception, control, and decision-making.
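To make the coverage guarantee concrete, here is a minimal split conformal prediction sketch for a 1-D regression problem. It is a generic textbook construction, not code from this project. The toy system y = 2x + noise, the already-trained predictor `predict`, and the helper names `conformal_quantile`, `calibrate` and `prediction_interval` are hypothetical. The non-conformity score is the absolute residual, and the interval half-width is the ceil((n + 1)(1 - alpha))-th smallest calibration score, which yields at least 1 - alpha coverage when calibration and test points are exchangeable.

```python
import math
import random

def conformal_quantile(scores, alpha):
    """Return the ceil((n + 1)(1 - alpha))-th smallest calibration score.

    If calibration and test scores are exchangeable, a fresh test score is
    at most this value with probability >= 1 - alpha.
    """
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return math.inf  # too few calibration points: the set is unbounded
    return sorted(scores)[k - 1]

def calibrate(predict, calib_x, calib_y, alpha):
    # Non-conformity score: absolute residual of an already-trained predictor.
    scores = [abs(y - predict(x)) for x, y in zip(calib_x, calib_y)]
    return conformal_quantile(scores, alpha)

def prediction_interval(predict, q, x):
    # Symmetric interval of half-width q around the point prediction.
    y_hat = predict(x)
    return y_hat - q, y_hat + q

if __name__ == "__main__":
    random.seed(0)
    # Hypothetical system y = 2x + noise; the predictor is assumed fixed.
    predict = lambda x: 2.0 * x
    calib_x = [random.uniform(0, 1) for _ in range(500)]
    calib_y = [2.0 * x + random.gauss(0, 0.1) for x in calib_x]
    q = calibrate(predict, calib_x, calib_y, alpha=0.1)
    test_x = [random.uniform(0, 1) for _ in range(2000)]
    test_y = [2.0 * x + random.gauss(0, 0.1) for x in test_x]
    hits = 0
    for x, y in zip(test_x, test_y):
        lo, hi = prediction_interval(predict, q, x)
        hits += lo <= y <= hi
    print(f"half-width {q:.3f}, empirical coverage {hits / len(test_x):.3f}")
```

The guarantee is marginal (averaged over calibration and test draws), so the printed coverage should land near, not exactly at, 0.90.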

Data-Driven Non-Conformity Scores

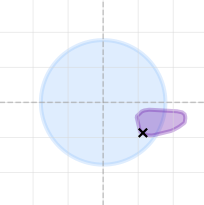

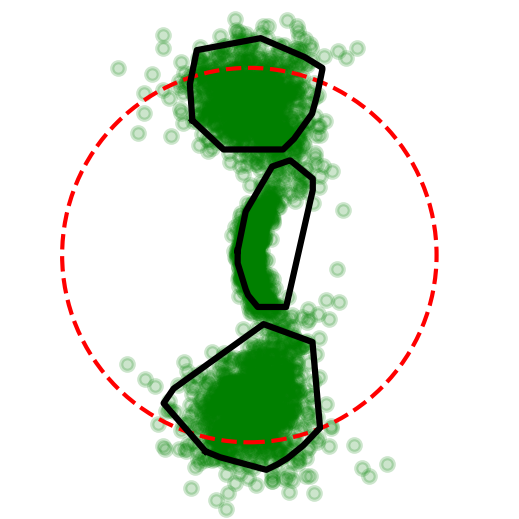

One critical problem in conformal prediction is the design of non-conformity scores, which measure how poorly a new data point conforms to the calibration data (for example, the absolute residual between a prediction and the observed outcome). Traditional approaches often rely on simple distance metrics or residuals, which may not capture the complex relationships in robotic data. This research direction focuses on developing data-driven non-conformity scores that leverage machine learning models to better capture the underlying structure of the data, leading to tighter, more informative prediction sets.
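As an illustration of why the choice of score matters, the sketch below compares two scores for a 2-D prediction error (for instance, the error of a predicted position). The Euclidean norm yields a circular region; a Mahalanobis-style norm whose shape matrix is estimated from a separate data split yields an ellipse. This is a simple stand-in for a data-driven score, not the method of the publications below and not the conformal_region_designer API. The anisotropic error model and all helper names (`fit_shape`, `mahalanobis_score`, `compare`, and so on) are hypothetical. Fitting the shape on data disjoint from the calibration set keeps the usual split conformal coverage guarantee.

```python
import math
import random

def conformal_quantile(scores, alpha):
    """The ceil((n + 1)(1 - alpha))-th smallest score, or +inf if n is too small."""
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))
    return math.inf if k > n else sorted(scores)[k - 1]

def fit_shape(errors):
    """Fit the 2x2 second-moment matrix S of the errors; return (S^-1, det S)."""
    n = len(errors)
    sxx = sum(ex * ex for ex, _ in errors) / n
    syy = sum(ey * ey for _, ey in errors) / n
    sxy = sum(ex * ey for ex, ey in errors) / n
    det = sxx * syy - sxy * sxy
    inv = ((syy / det, -sxy / det), (-sxy / det, sxx / det))
    return inv, det

def euclidean_score(e):
    # Hand-designed score: the region {e : |e| <= q} is a circle.
    return math.hypot(e[0], e[1])

def mahalanobis_score(e, inv):
    # Data-driven score: the region {e : e^T S^-1 e <= q^2} is an ellipse.
    (a, b), (_, d) = inv
    return math.sqrt(a * e[0] ** 2 + 2 * b * e[0] * e[1] + d * e[1] ** 2)

def sample_errors(n):
    # Hypothetical anisotropic errors: large along-track, small cross-track.
    return [(random.gauss(0, 1.0), random.gauss(0, 0.1)) for _ in range(n)]

def compare(seed=0, alpha=0.1):
    """Return {name: (empirical coverage, region area)} for both scores."""
    random.seed(seed)
    inv, det = fit_shape(sample_errors(500))  # split 1: learn the score's shape
    calib = sample_errors(500)                # split 2: calibrate the threshold
    test = sample_errors(2000)                # held-out check of coverage
    q_c = conformal_quantile([euclidean_score(e) for e in calib], alpha)
    q_e = conformal_quantile([mahalanobis_score(e, inv) for e in calib], alpha)
    return {
        "circle": (sum(euclidean_score(e) <= q_c for e in test) / len(test),
                   math.pi * q_c ** 2),
        # Area of {e : e^T S^-1 e <= q^2} is pi * q^2 * sqrt(det S).
        "ellipse": (sum(mahalanobis_score(e, inv) <= q_e for e in test) / len(test),
                    math.pi * q_e ** 2 * math.sqrt(det)),
    }

if __name__ == "__main__":
    for name, (coverage, area) in compare().items():
        print(f"{name}: coverage {coverage:.3f}, area {area:.2f}")
```

With strongly anisotropic errors, both regions should reach roughly 90% coverage, but the ellipse should cover a much smaller area. Richer learned scores follow the same pattern: learn the score on one split, then calibrate its threshold on another.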

A Python library for data-driven non-conformity scores is available as conformal_region_designer.

Selected publications:

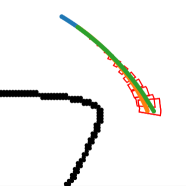

- Physics Constrained Motion Prediction with Uncertainty Quantification [1]
  Renukanandan Tumu, Lars Lindemann, Truong X. Nghiem, Rahul Mangharam
  Intelligent Vehicles (IV), 2023

- Multi-Modal Conformal Prediction Regions by Optimizing Convex Shape Templates [2]
  Renukanandan Tumu, Matthew Cleaveland, Rahul Mangharam, George Pappas, Lars Lindemann
  Learning for Dynamics and Control (L4DC), 2024

- AdaptNC: Adaptive Nonconformity Scores for Uncertainty-Aware Autonomous Systems in Dynamic Environments [3]
  Renukanandan Tumu, Aditya Singh, Rahul Mangharam
  arXiv, 2026

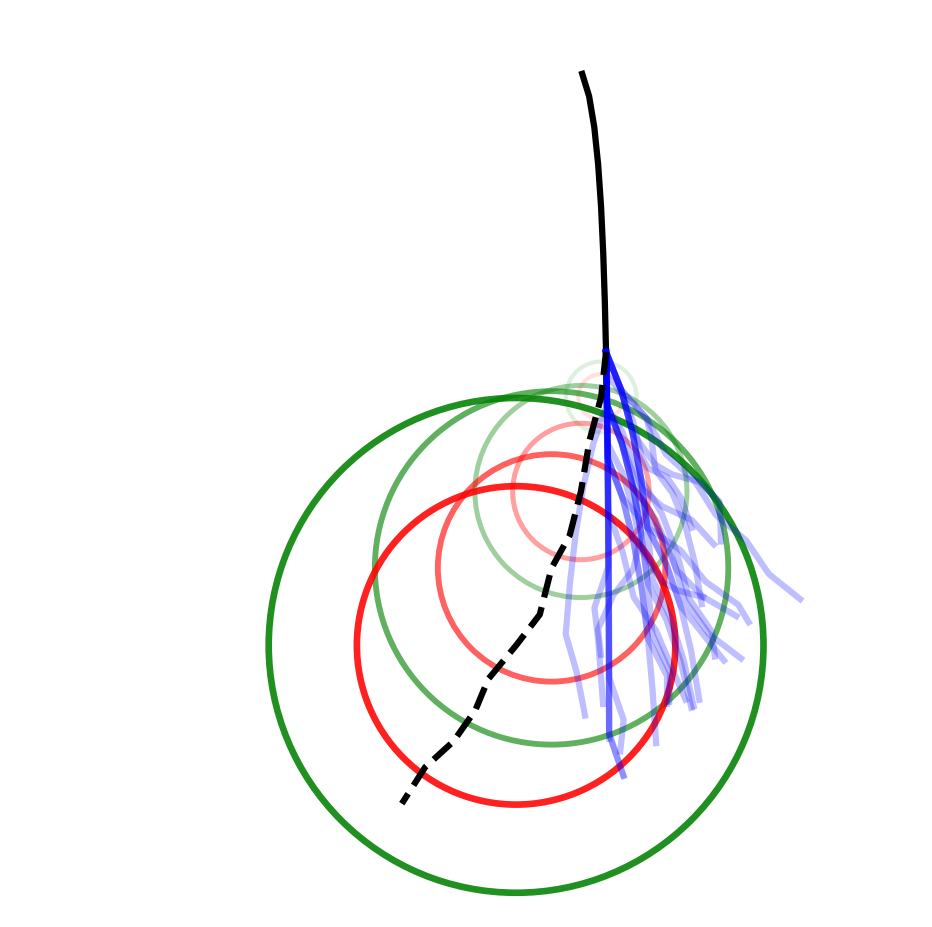

Conformal Prediction in Multi-Agent Settings

Another critical problem in the application of conformal prediction to robotics is overcoming distribution shifts that arise in multi-agent settings. Standard conformal guarantees assume that calibration and test data are exchangeable, and a shift in the data distribution breaks that assumption. In multi-agent scenarios, the behavior of one agent can significantly influence the environment and the data distribution observed by other agents. This research direction focuses on developing conformal prediction methods that adapt to these distribution shifts, ensuring that prediction sets remain valid and informative even as interactions evolve.
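One generic way to keep coverage under shift is adaptive conformal inference (Gibbs and Candès, 2021), which nudges a working miscoverage level after every observation: alpha_t is updated to alpha_t + gamma * (alpha - err_t), where err_t is 1 if the outcome fell outside the set and 0 otherwise. It is a standard technique swapped in here for illustration, not the method of the publications listed in this section. The stream (another agent's lateral offset whose spread jumps at t = 1000), the constant predictor, the window size, the step size `gamma`, and the helper names `threshold` and `run_aci` are all hypothetical. For any data sequence, the long-run miscoverage stays within (max(alpha_1, 1 - alpha_1) + gamma) / (gamma * T) of alpha.

```python
import math
import random

def threshold(scores, level):
    """ceil((n + 1) * level)-th smallest score.

    Returns +inf (the whole space) when that index exceeds n, and -inf (the
    empty set) when level <= 0, which ACI needs when alpha_t leaves [0, 1].
    """
    n = len(scores)
    k = math.ceil((n + 1) * level)
    if k <= 0:
        return -math.inf
    if k > n:
        return math.inf
    return sorted(scores)[k - 1]

def run_aci(ys, predict, alpha=0.1, gamma=0.01, window=200):
    """Adaptive conformal inference on a stream; returns miscoverage flags."""
    alpha_t = alpha
    history = []  # past non-conformity scores (absolute residuals)
    errs = []
    for t, y in enumerate(ys):
        y_hat = predict(t)
        q = threshold(history[-window:], 1 - alpha_t)
        err = 0 if abs(y - y_hat) <= q else 1
        errs.append(err)
        # A miss lowers alpha_t (wider sets); a hit raises it (narrower sets).
        alpha_t += gamma * (alpha - err)
        history.append(abs(y - y_hat))
    return errs

if __name__ == "__main__":
    random.seed(0)
    # Hypothetical stream: another agent's lateral offset, whose spread jumps
    # from 0.1 to 0.5 at t = 1000 (e.g. after the ego robot changes behavior).
    ys = [random.gauss(0, 0.1 if t < 1000 else 0.5) for t in range(2000)]
    errs = run_aci(ys, predict=lambda t: 0.0)
    print(f"miscoverage overall: {sum(errs) / len(errs):.3f}")
    print(f"miscoverage after shift: {sum(errs[1000:]) / 1000:.3f}")
```

Right after the jump, misses should cluster until alpha_t drops far enough to widen the sets; with T = 2000, gamma = 0.01 and alpha = 0.1, the bound above keeps overall miscoverage within about 0.046 of 0.1.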

Selected publications:

- Conformal Off-Policy Prediction for Multi-Agent Systems [4]
  Tom Kuipers, Renukanandan Tumu, Shuo Yang, Milad Kazemi, Rahul Mangharam, Nicola Paoletti
  Conference on Decision and Control (CDC), 2024

- AdaptNC: Adaptive Nonconformity Scores for Uncertainty-Aware Autonomous Systems in Dynamic Environments [3]
  Renukanandan Tumu, Aditya Singh, Rahul Mangharam
  arXiv, 2026

References

2026

2024

- [4] Conformal Off-Policy Prediction for Multi-Agent Systems. In 2024 IEEE 63rd Conference on Decision and Control (CDC), 2024.

Conformal Off-Policy Prediction for Multi-Agent SystemsIn 2024 IEEE 63rd Conference on Decision and Control (CDC), 2024