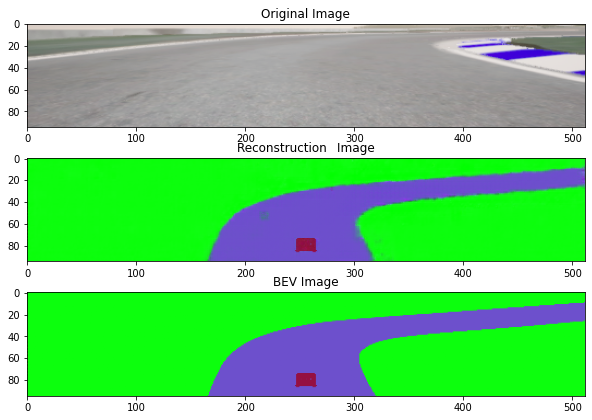

I want to share with you how I built a neural network to go from this:

To this:

In words, our task is to take the input image, a front-camera view of the track ahead, and construct a bird's-eye view of the track instead, segmented so that the track and off-track regions are colored differently.

Working out where the track is headed from the input image alone is difficult, because much of the information about the upcoming track is squeezed into the top 20 pixel rows of the image. The bird's-eye view camera expresses that same information about the track ahead in a much clearer format, one that we can more easily use to plan the car's behavior.

This reconstruction task is important because we will not always have access to the bird's-eye view camera. If we can reconstruct the bird's-eye images accurately from the front camera, we can still plan with the more clearly expressed information. An additional benefit is dimensionality reduction: we effectively represent the whole image as a set of 32 numbers, which takes up considerably less space than the full image. If we so desire, this compact representation can serve as the observation space for a Reinforcement Learning algorithm.
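For a rough sense of scale, the snippet below compares the number of values in a raw image with the 32-number latent vector. The 64×64 RGB resolution is an assumption chosen for illustration; the article does not state the camera image size, so treat the exact ratio as hypothetical.

```python
# Assumed image size (NOT stated in the article): 64x64 pixels with 3 color channels.
IMAGE_HEIGHT = 64
IMAGE_WIDTH = 64
CHANNELS = 3
LATENT_DIM = 32  # size of the latent vector described in the text


def compression_ratio(height: int, width: int, channels: int, latent_dim: int) -> float:
    """Return how many raw pixel values each latent number stands in for."""
    raw_values = height * width * channels
    return raw_values / latent_dim


raw = IMAGE_HEIGHT * IMAGE_WIDTH * CHANNELS
ratio = compression_ratio(IMAGE_HEIGHT, IMAGE_WIDTH, CHANNELS, LATENT_DIM)
print(f"{raw} raw values -> {LATENT_DIM} latent values ({ratio:.0f}x fewer)")
# prints "12288 raw values -> 32 latent values (384x fewer)"
```

Under this assumption an RL agent would observe 32 numbers per step instead of 12,288 pixel values.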

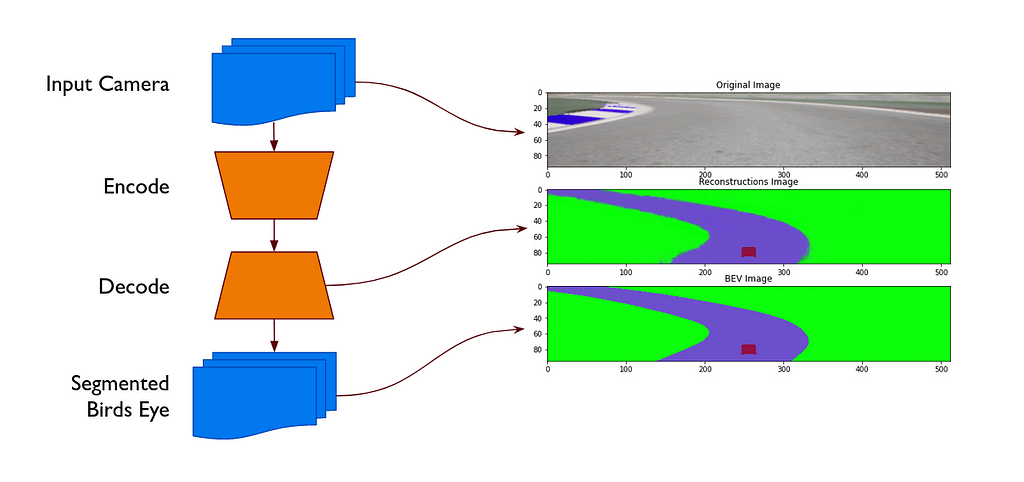

We build on Variational Autoencoders (VAEs) to accomplish this task. In plain English, we take the front-camera image, compress it into a 32-dimensional latent space, and then reconstruct the segmented bird's-eye view from that latent vector. The exact network architecture is expressed in PyTorch code at the end of this article.
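The sketch below illustrates the two pieces that set a VAE apart from a plain autoencoder: the reparameterization trick, which samples the latent vector from a mean and log-variance predicted by the encoder, and a loss that adds a KL-divergence term to the reconstruction error. It is a minimal plain-Python sketch rather than PyTorch so that it runs without dependencies. The function names, the per-pixel binary cross-entropy against a 0/1 track mask, and the equal weighting of the two loss terms are assumptions for illustration, not the author's implementation. The reconstruction term compares the decoder output with the bird's-eye target rather than with the front-camera input.

```python
import math
import random

LATENT_DIM = 32

# Hypothetical helpers: a minimal sketch of VAE building blocks, assuming
# loss = per-pixel BCE on a 0/1 bird's-eye mask + KL term, equally weighted.


def reparameterize(mu, logvar, rng=random):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) and sigma = exp(logvar / 2)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_divergence(mu, logvar):
    """KL(N(mu, sigma^2) || N(0, 1)) for a diagonal Gaussian, summed over latent dims."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))


def binary_cross_entropy(pred, target, eps=1e-7):
    """Summed per-pixel BCE between predicted probabilities and a 0/1 mask."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # clamp so log() never sees 0
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total


def vae_loss(pred_birdseye, target_birdseye, mu, logvar):
    """Reconstruction error against the bird's-eye target plus the KL regularizer."""
    return binary_cross_entropy(pred_birdseye, target_birdseye) + kl_divergence(mu, logvar)


# Example: an encoder output that exactly matches the N(0, 1) prior has zero KL cost.
mu = [0.0] * LATENT_DIM
logvar = [0.0] * LATENT_DIM
z = reparameterize(mu, logvar)
print(len(z), kl_divergence(mu, logvar))  # 32 0.0
```

In a real training loop these operations would run on tensors so that a framework such as PyTorch can backpropagate through the sampling step; the list-based version only shows the arithmetic.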

To train this, we collect pairs of images from the car, one from the front camera and one from the bird's-eye camera. We pass the front-camera image through the encoder, a series of convolution layers, and then reduce the dimensionality to our 32-dimensional target with a fully connected layer. From this latent vector, the decoder reconstructs the bird's-eye image with a series of deconvolution (transposed convolution) layers.
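The encoder, fully connected bottleneck, and decoder are easiest to follow by tracking the spatial size of the feature maps. The sketch below applies the standard output-size formulas for strided convolutions and transposed convolutions (with dilation 1). The layer settings are assumptions, not necessarily the architecture at the end of this article: a 64×64 input, four stride-2 convolutions with kernel 4, and a decoder that starts from a 1×1 map and uses kernels 5, 5, 6, 6, similar to the VAE in the World Models paper (Ha and Schmidhuber, 2018).

```python
def conv_out(size: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Output size of a 2D convolution along one spatial dimension (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size: int, kernel: int, stride: int, padding: int = 0,
               output_padding: int = 0) -> int:
    """Output size of a 2D transposed convolution along one dimension (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


# Assumed layers as (out_channels, kernel); every layer uses stride 2, no padding.
ENCODER = [(32, 4), (64, 4), (128, 4), (256, 4)]
DECODER = [(128, 5), (64, 5), (32, 6), (3, 6)]  # last layer: assumed 3-channel output
LATENT_DIM = 32


def encoder_sizes(input_size: int = 64) -> list:
    """Spatial size after each encoder convolution, starting from the input."""
    sizes = [input_size]
    for _, kernel in ENCODER:
        sizes.append(conv_out(sizes[-1], kernel, stride=2))
    return sizes


def decoder_sizes(start_size: int = 1) -> list:
    """Spatial size after each decoder transposed convolution."""
    sizes = [start_size]
    for _, kernel in DECODER:
        sizes.append(deconv_out(sizes[-1], kernel, stride=2))
    return sizes


enc = encoder_sizes(64)
flat_features = ENCODER[-1][0] * enc[-1] * enc[-1]  # input width of the FC layer
dec = decoder_sizes(1)
print("encoder spatial sizes:", enc)  # [64, 31, 14, 6, 2]
print("flattened features -> latent:", flat_features, "->", LATENT_DIM)  # 1024 -> 32
print("decoder spatial sizes:", dec)  # [1, 5, 13, 30, 64]
```

With these assumed settings the encoder shrinks the image to a 2×2×256 map (1,024 values) that the fully connected layer maps to 32 numbers, and the decoder grows a 1×1 map back to the full 64×64 resolution of the bird's-eye target.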

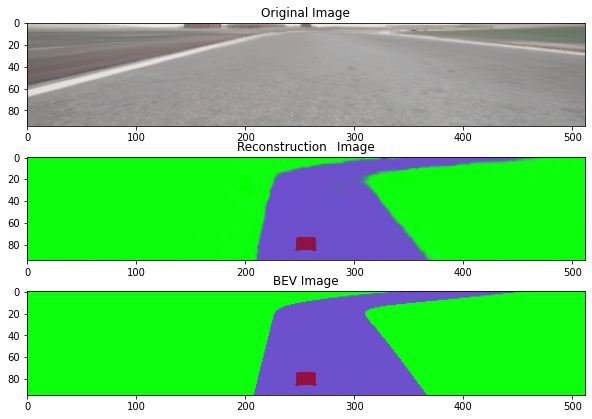

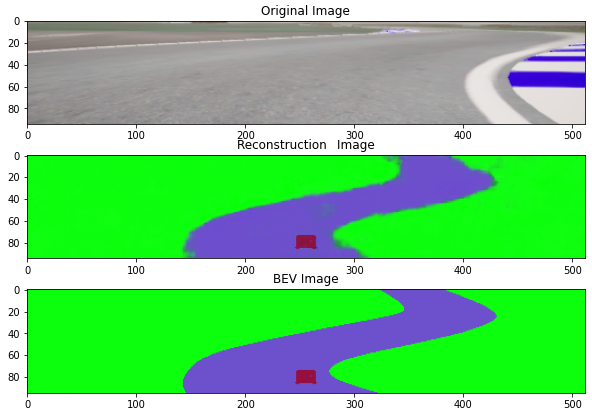

The result is a method that generates the reconstructions shown below!

Although the reconstruction is somewhat noisy, it captures the overall shape and curves of the track ahead quite well. Our team used this method, along with extensions to it, to help develop autonomous racing control methods in the AICrowd Autonomous Racing Challenge!